Published: 12/30/2025

SIGGRAPH Asia 2025 in Hong Kong: Generative AI Reshapes Game Development

From December 15 to 18, the 18th ACM SIGGRAPH Asia Conference and Exhibition on Computer Graphics and Interactive Techniques (SIGGRAPH Asia 2025) took place at the Hong Kong Convention and Exhibition Centre (HKCEC). Recognized as one of the world’s premier academic events in computer graphics and interactive technologies, this year’s edition ran under the theme “Generative Renaissance”, spotlighting how AI is reinventing the creative industries in very real, production-ready ways. More than 7,000 attendees and over 600 speakers from around the globe joined the event, while tech giants such as Tencent, Huawei and Adobe showcased their latest breakthroughs in generative AI, neural graphics and real-time rendering.

On site, Tencent hosted a series of technical sessions under the umbrella topic “Neural Graphics and Generative AI”, sharing its newest results in areas like 3D world models and large-scale perception. The talks laid out a clear arc from cutting-edge papers to AI-powered products serving hundreds of millions of users. Within this program, Tencent Games presented nine accepted research papers and three focused talks, all circling around how AI can reinvent asset production, engine rendering pipelines and intelligent tools. Across these sessions, Tencent’s AI researchers and global experts in computer graphics and game development exchanged concrete ideas on how to embed AI deeply into next‑generation production workflows.

AI Reshaping Game Production Pipelines

Tencent Games’ technical team unveiled the paper “Imaginarium: Vision-guided High-Quality Scene Layout Generation”, officially presented in the Technical Papers track. The work directly addresses a long-standing pain point in open-world game development: the huge amount of time and labor spent arranging non-core areas such as city streets, foliage, and background architecture. To tackle this, the team proposes a vision-guided method for generating high-quality 3D scene layouts that respect both artistic constraints and gameplay logic.

According to Tencent Games technical expert Zhu Xiaoming, Imaginarium is an AI-powered intelligent scene generation system designed as a practical tool for game artists, not just a tech demo. It can understand natural language instructions and then “think like a level designer,” automatically producing 3D layouts that fit a designated art style while keeping composition, spacing and gameplay flow consistent. This makes it especially valuable for rapidly building large non-key regions on open-world maps, so that art teams can spend more time polishing core story locations and hero assets instead of repetitive layout work.

Zhu explained in his talk that the real breakthrough lies in how the system reasons about scene intent. It does not simply learn where objects are usually placed; it tries to infer why they should be placed that way. By modeling scene logic and narrative goals, the system performs a kind of “slow thinking” similar to human experts, making its layouts more believable and more closely aligned with the game’s storytelling needs.

One demo, for instance, showed the result generated from the prompt: *“a cozy living room with a comfortable armchair, a gallery wall and a stylish coffee table.”* The system automatically arranged furniture, decorations and lighting in a layout that felt both natural and aesthetically pleasing, as if a professional interior artist had sketched it by hand.

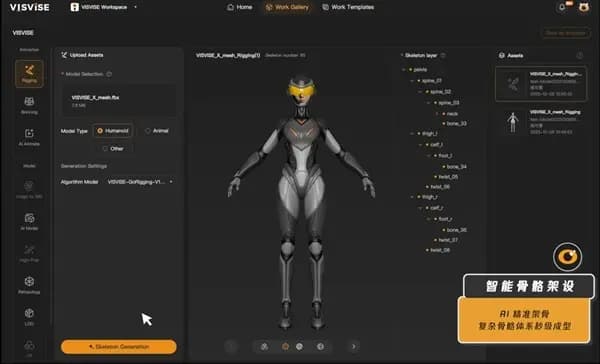

After debuting a full-pipeline AI animation solution at Gamescom in Cologne this August, Tencent’s VISVISE team arrived at SIGGRAPH Asia 2025 with an upgraded, production-ready, end-to-end AI pipeline for 3D animation. VISVISE AI Animation Lead Zeng Zijiao delivered a talk titled “Breathing Life into Geometry: An End-to-End AI Pipeline for 3D Character Animation”, walking attendees through the latest iterations of the core technologies and how they plug into real game projects.

Zeng explained that the new pipeline builds on the solution shown at Gamescom by tightly integrating four key modules: skeleton generation, intelligent skinning, 3D animation generation and smart in-betweening. Together, they form what Tencent describes as the industry’s first full-process AI pipeline for 3D character animation, with outputs that can be directly imported into existing game production workflows. This means teams can dramatically shorten animation production cycles without rewriting their tools from scratch. At the booth, VISVISE set up a hands-on product demo zone, where attendees could watch the complete flow, from raw character mesh to final playable animation, running in real time.

Today, VISVISE’s AI animation solutions are already in use on nearly 100 game projects, including “Peacekeeper Elite,” “Honor of Kings,” “PUBG Mobile,” “Golden Spatula Battle” and “League of Legends: Wild Rift.” Internal data shows that the pipeline has boosted production efficiency by more than eight times, freeing animators from repetitive tasks so they can focus on nuanced motion direction and performance.

A workflow diagram of the VISVISE pipeline was also showcased, visually walking visitors through each AI-assisted step, from rigging and skinning to motion generation and refinement.

Another live demo highlighted the intelligent skeleton rigging process in action, with the system automatically generating and adjusting bone structures for different character models while users watched the “generating” status update in real time.

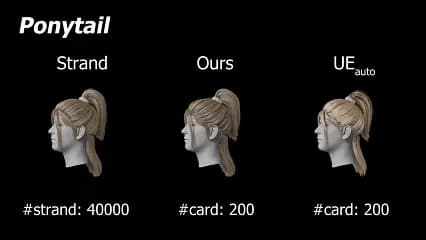

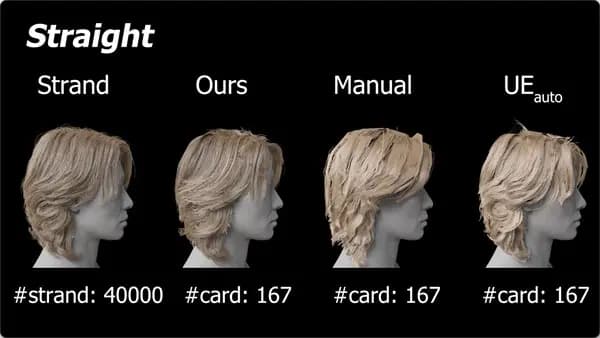

Beyond production tools, Tencent Games’ Photon Studio Group experts Wu Kui and Zheng Zhongtian gave a Technical Papers talk titled “Automatic Character Hair Card Extraction with Differentiable Rendering.” Their presentation covered the method’s stages (asset decomposition, preprocessing and differentiable rendering) to explain how it automatically extracts hair cards for characters. The approach preserves visual quality while cutting down repetitive manual steps in specific phases of art production, giving artists more room to fine-tune styling instead of manually rebuilding similar assets again and again.

During the talk, the two speakers showed side-by-side results comparing traditional workflows with their automated solution, making it clear how much time can be saved without sacrificing detail or realism.

Smarter 3D Modeling: Geometry, Topology and AI

When it comes to 3D modeling for games, traditional multi-view generation methods often struggle with poor geometric consistency and difficulties in representing complex topologies. To address these long-standing issues, Tencent technical expert Weikai Chen and his collaborators introduced SPGen, a spherical projection–based method for 3D shape generation, detailed in the paper “Spherical Projection as Consistent and Flexible Representation for Single Image 3D Shape Generation.” They also shared their work and experimental results during the conference.

SPGen works by projecting an object’s surface onto an enclosing sphere, elegantly avoiding the view-inconsistency issues common in classical multi-view generation pipelines. This spherical representation allows the system to accurately reconstruct intricate geometric structures, while leveraging strong diffusion priors to significantly boost model accuracy and generalization. At the same time, SPGen drastically reduces GPU requirements for training: the full model can be trained with just two GPUs totaling 96 GB of memory. For game developers, this means higher modeling quality and efficiency without needing a massive compute farm, making advanced 3D shape generation more accessible to mid-sized teams.
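To make the idea of projecting a surface onto an enclosing sphere more concrete, here is a minimal sketch. It is not SPGen's implementation. It assumes the surface is given as sample points around the origin, bins each point by direction into a latitude-longitude grid, and keeps the largest radius seen in each cell (a single outermost depth layer). The function name `spherical_depth_map` and the grid resolution are hypothetical choices made for illustration.

```python
import math

def spherical_depth_map(points, n_theta=8, n_phi=16):
    """Bin 3D surface points by direction and keep the largest radius per cell.

    Rows index the polar angle theta in [0, pi]; columns index the azimuth
    phi in [-pi, pi]. Cells that no point falls into stay at 0.0.
    """
    depth = [[0.0] * n_phi for _ in range(n_theta)]
    for x, y, z in points:
        radius = math.sqrt(x * x + y * y + z * z)
        if radius == 0.0:
            continue  # the center has no direction
        theta = math.acos(max(-1.0, min(1.0, z / radius)))
        phi = math.atan2(y, x)
        row = min(int(theta / math.pi * n_theta), n_theta - 1)
        col = min(int((phi + math.pi) / (2 * math.pi) * n_phi), n_phi - 1)
        depth[row][col] = max(depth[row][col], radius)
    return depth

# Corners of a cube centered at the origin; each lies at radius sqrt(3).
cube = [(sx, sy, sz) for sx in (-1, 1) for sy in (-1, 1) for sz in (-1, 1)]
depth_map = spherical_depth_map(cube)
filled = sum(1 for row in depth_map for value in row if value > 0.0)
largest = max(max(row) for row in depth_map)
```

Because every direction maps to exactly one grid cell, a single 2D map describes the object from all sides at once, which is the property the paragraph above contrasts with separate per-view images. A single layer like this cannot capture surfaces hidden behind others along the same ray, so this sketch only covers the simplest case.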

Another paper, led by Tencent expert Du Xingyi, focused on “Lifted Surfacing of Generalized Sweep Volumes”, a novel framework for generating surfaces of complex 3D swept volumes. The method is rooted in a mathematically precise formulation that automatically constructs closed, self-intersection-free boundary surfaces. For developers working with intricate shapes, this translates to cleaner geometry and fewer artifacts in downstream simulations and rendering.

By improving modeling accuracy, especially in scenes with challenging geometric and topological features, this technology offers more reliable support for designing complex virtual environments and in-game props. In practice, it enables teams to build dense, detailed worlds more efficiently, offering a stronger toolkit for studios pushing toward higher-fidelity, AI-assisted game content.

AI + Game Engines: Lighting, Animation and Rendering Efficiency

On the engine side, Tencent Games presented results spanning lighting, animation, physics simulation, geometry processing and real-time rendering—core modules that collectively define how modern games look and feel. The central idea running through all these projects is how to use AI in game engines to deliver more immersive, smoother and more responsive gameplay experiences.

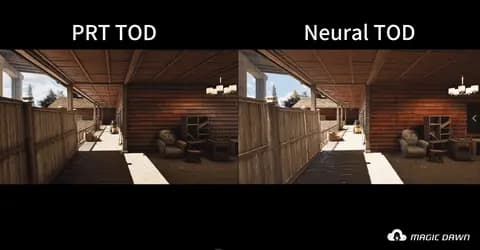

As real-time graphics continues to evolve, AI-driven rendering inside games has become a major industry focus, especially for studios chasing “next-gen” visuals on both PC and mobile. Tencent Games’ Head of Advanced Rendering R&D, Li Chao, shared the team’s latest progress in in-game rendering technology and gave a deep breakdown of MagicDawn, Tencent’s in-house, cross-engine global illumination and lighting solution.

In his session “Frontier Explorations in Rendering Technology and Game Practice—Breaking Audio-Visual Boundaries for Next-Gen Interactive Experiences,” Li walked through MagicDawn’s core architecture. The solution is designed to be engine-agnostic, supports dynamic day–night cycles, and uses AI to dramatically compress data size while improving baking and runtime efficiency. In production tests, MagicDawn reduced light baking times for large-scale games from several days down to just a few hours. At the same time, it enabled mobile titles to achieve lighting and shadow quality close to that of big-budget AAA productions.

By directly addressing industry-wide bottlenecks in global illumination, such as limited lighting quality and long authoring times, MagicDawn provides an end-to-end answer that covers everything from tech selection to large-scale deployment. The system also reflects Tencent’s deep experience in industrial-grade lighting pipelines. MagicDawn has already been deployed in games such as “Arena Breakout,” “Arena Breakout Infinite,” “Wuthering Waves,” and “World of Rock Kingdom.”

One demo reel at the booth showcased intelligent rendering scenarios powered by AI, where lighting, shading and post-processing worked together to deliver stable, high-fidelity images under tight frame-time budgets.

In the animation system track, experts from Tencent Games’ Aurora Studio Group (Xilei Wei, Lang Xu and Yeshuang Lin) and a team from Zhejiang University jointly presented their research “Ultrafast and Controllable Online Motion Retargeting for Game Scenarios.” The work introduces a novel “semantics-aware geometric representation” that allows a single set of actions to be rapidly and naturally reused across characters with very different body shapes and proportions.

Their method achieves a per-frame processing time of just 0.13 milliseconds, while enabling precise control over hand and foot contact points as well as weapon interactions. For large-scale game worlds populated with many unique characters, this technology becomes a key enabler—teams can produce rich, responsive motion across entire crowds without manually tailoring animations for each character, preserving realism and gameplay responsiveness.
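A rough back-of-the-envelope calculation helps put the 0.13 ms figure in context. The sketch below assumes that the cost applies per character per frame and that retargeting is allowed 10% of the frame time. Both are illustrative assumptions, not figures from the paper, and the function name `max_characters` is hypothetical.

```python
def max_characters(frame_rate_hz, cost_ms=0.13, budget_share=0.10):
    """Characters that fit in the retargeting share of one frame's time."""
    frame_ms = 1000.0 / frame_rate_hz
    return int(frame_ms * budget_share / cost_ms)

at_30_fps = max_characters(30)    # 25 characters
at_60_fps = max_characters(60)    # 12 characters
at_120_fps = max_characters(120)  # 6 characters
```

Under these assumptions, retargeting even a dozen characters at 60 fps stays within a tenth of the frame budget on a single thread.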

For geometric asset processing, Tencent Games’ Photon Studio Group expert Wu Kui and collaborators also shared their joint work with Zhejiang University: “RL-AcD: Reinforcement Learning-Based Approximate Convex Decomposition.” This technique provides more efficient and compact geometric representations for complex scenes and character models by decomposing them into near-convex parts. Such decompositions form a critical foundation for collision detection and physics in highly interactive virtual worlds, helping engines scale to large, detailed environments without exploding computational costs.
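Approximate convex decomposition relies on some measure of how far a shape is from being convex. The sketch below shows one common 2D version of such a measure: the fraction of the convex hull's area that the shape leaves uncovered. It does not reproduce RL-AcD, whose reinforcement learning policy and 3D metric are not described here, and the function names are hypothetical.

```python
def cross(o, a, b):
    """Z component of the cross product of vectors o->a and o->b."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices counter-clockwise."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def polygon_area(vertices):
    """Shoelace formula; vertices must be listed in boundary order."""
    total = 0.0
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0

def concavity(polygon):
    """Fraction of the convex hull's area that the polygon does not cover."""
    return 1.0 - polygon_area(polygon) / polygon_area(convex_hull(polygon))

# An L-shaped polygon and a split of it into two rectangles.
l_shape = [(0, 0), (2, 0), (2, 1), (1, 1), (1, 2), (0, 2)]
left_part = [(0, 0), (1, 0), (1, 2), (0, 2)]
right_part = [(1, 0), (2, 0), (2, 1), (1, 1)]
```

Here `concavity(l_shape)` is 1/7 (area 3 inside a hull of area 3.5), while both rectangles score 0. A decomposition method searches for cuts that bring every part below a chosen concavity threshold using as few parts as possible.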

In addition, Wu participated in another project titled “Kinetic Free-Surface Flows and Foams with Sharp Interfaces.” This research tackles a notoriously difficult problem in fluid simulation: achieving high efficiency and stability while still rendering rich foam details and sharp interfaces. The method makes it possible to simulate water, foam and splashes in a way that both looks convincing on screen and runs at practical speeds for real-time or near-real-time applications.

To address the challenge of rendering high image quality at high frame rates, Engine expert Yuan Yazhen and his team introduced fresh solutions targeting both traditional screens and immersive XR devices. One paper, “Predictive Sparse Shading for Continuous Frame Extrapolation,” proposes rendering only the visually complex regions of a frame under an extremely tight rendering budget. By predicting and concentrating shading work where it matters most, the method significantly improves frame stability and detail fidelity while preserving a high frame-rate experience for players.
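The idea of spending a fixed shading budget only where the image is complex can be illustrated with a small sketch. It is not the paper's predictor. It assumes the previous frame's luminance is available as a 2D list whose dimensions are multiples of the tile size, and it uses per-tile contrast (maximum minus minimum luminance) as a stand-in complexity score. The function names and the scoring rule are hypothetical.

```python
def tile_contrast(image, top, left, size):
    """Contrast of one square tile: max minus min luminance."""
    values = [image[y][x]
              for y in range(top, top + size)
              for x in range(left, left + size)]
    return max(values) - min(values)

def select_tiles_to_shade(prev_frame, tile_size, budget):
    """Return the (top, left) corners of the `budget` highest-contrast tiles."""
    height, width = len(prev_frame), len(prev_frame[0])
    scored = []
    for top in range(0, height, tile_size):
        for left in range(0, width, tile_size):
            score = tile_contrast(prev_frame, top, left, tile_size)
            scored.append((score, top, left))
    scored.sort(key=lambda item: -item[0])  # stable: ties keep scan order
    return [(top, left) for _, top, left in scored[:budget]]

# 4x4 luminance image: detail in the top-right tile, a soft edge bottom-left.
frame = [
    [0.1, 0.1, 0.2, 0.9],
    [0.1, 0.1, 0.8, 0.3],
    [0.5, 0.5, 0.4, 0.4],
    [0.5, 0.6, 0.4, 0.4],
]
tiles = select_tiles_to_shade(frame, tile_size=2, budget=2)
```

With a budget of two tiles, the selection is the top-right tile followed by the bottom-left one. The two flat tiles would be filled by extrapolation rather than shaded again.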

For VR and AR scenarios, where rendering two high-resolution views is particularly demanding, the team presented “StereoFG: Generating Stereo Frames from Centered Feature Stream.” As described, the method alternates between the eyes: it renders a low-resolution image for one eye, then uses a neural network to generate the other eye’s high-quality frame from a centered feature stream. In practice, this approach achieves up to a fourfold improvement in stereo rendering throughput, easing the performance bottleneck that often holds back immersive experiences.

AI and Games: A Natural Testbed for 3D Graphics and Interaction

ACM SIGGRAPH Executive Committee Chair and Peking University Professor Chen Baoquan emphasized that a key upgrade in this year’s conference is the focus on the crucial role of 3D graphics in AI and emerging industries. With their fusion of 3D graphics, physics simulation and real-time interaction, games naturally stand out as one of the core domains for AI development and application, both as testbeds and as large-scale deployment platforms.

Tencent Games has been systematically investing in AI training and application for games since 2016. Over the years, the company has been weaving AI into development pipelines to tackle technical challenges—while also building AI assistants, AI companions and other intelligent features in titles such as “Honor of Kings” and “Peacekeeper Elite.” These features are not just marketing slogans; they reshape how players learn, cooperate and experience game worlds.

By relying on games as highly realistic, strongly interactive environments, Tencent can bring cutting-edge AI technologies quickly from lab prototypes into live products, turning them into tangible experiences that millions of players actually feel. As a result, users effectively become real-world adopters and beneficiaries of each incremental AI advance, rather than distant observers of abstract research.

Many of the frontier technologies Tencent Games shared at SIGGRAPH Asia 2025—from neural scene generation and AI animation pipelines to AI lighting, fluid simulation and stereo rendering—will ultimately prove their value in one place: the moment they are transformed into the next generation of game experiences that players can see, control and interact with directly.