Published: 12/30/2025

From December 15th to 18th, the Hong Kong Convention and Exhibition Centre (HKCEC) became the epicenter of digital innovation as it hosted the 18th ACM SIGGRAPH Asia. As the premier global academic gathering for computer graphics and interactive techniques, SIGGRAPH Asia 2025 embraced the theme "Generative Renaissance," spotlighting how artificial intelligence is fundamentally reshaping the creative industries. The event drew over 7,000 attendees and more than 600 speakers from around the world, with tech giants like Tencent, Huawei, and Adobe showcasing their latest breakthroughs in AI and graphics.

On the exhibition floor, Tencent hosted a series of technical workshops centered on "Neural Graphics and Generative AI," demonstrating how technology evolves from academic papers into products serving millions. Tencent Games presented nine selected papers and three themed talks, engaging with global AI and graphics experts on how technology is changing asset production and engine rendering.

AI Reshaping the Production Pipeline for Better Content

In the Technical Papers section, the Tencent Games technical team unveiled their research titled "Imaginarium: Vision-guided High-Quality Scene Layout Generation." This study tackles a major industry pain point: the time-consuming process of populating massive non-core areas in open-world games, such as urban streetscapes and natural vegetation. They proposed a novel method for generating high-quality 3D scene layouts guided by vision.

According to Zhu Xiaoming, a technical expert at Tencent Games, Imaginarium acts as an AI-based intelligent scene generation system. It interprets text instructions and learns to "think like a designer," automatically creating 3D scenes that are both aesthetically consistent and logically laid out. This is particularly effective for rapidly constructing vast background areas in game maps, allowing art teams to dedicate their energy to core creative designs.

Zhu Xiaoming presenting: "Imaginarium: Vision-guided High-Quality Scene Layout Generation"

The system's breakthrough lies in its ability to go beyond mere placement imitation. It understands the "why" behind the arrangement, inferring scene logic and narrative intent to achieve a level of "slow thinking" comparable to human experts.

Concept rendering generated from the prompt: "A cozy living room with a comfortable armchair, a gallery wall, and a stylish coffee table"

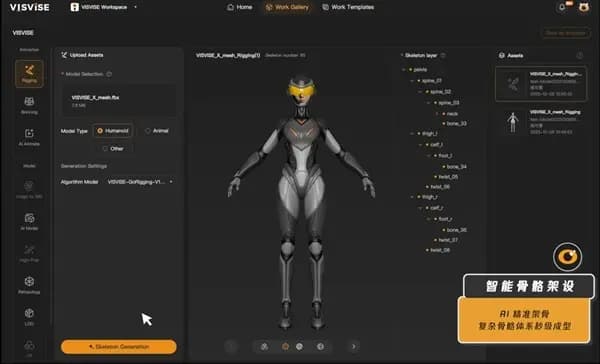

Following their showcase at Gamescom in Germany this past August, the VISVISE team from Tencent Games returned with their latest advancements, officially launching the industry's first AI-driven full-process 3D animation pipeline. Zeng Zijiao, the head of VISVISE AI Animation, delivered a speech titled "Giving Life to Geometry," detailing the iteration of their core technologies.

Zeng Zijiao presenting: "Giving Life to Geometry: The Full-Process AI Pipeline for 3D Character Animation"

Building on their original end-to-end solution, this pipeline integrates four key modules: skeleton generation, intelligent skinning, 3D animation generation, and smart frame interpolation. This creates a seamless workflow where creative outputs can be directly imported into existing game production lines, significantly boosting industrial efficiency. VISVISE also set up an interactive experience zone at the venue, demonstrating to attendees how to efficiently craft 3D character animations using their tools.

Currently, VISVISE's solutions are applied in nearly 100 game projects, including titles like *Peacekeeper Elite*, *Honor of Kings*, *PUBG Mobile*, *Golden Spatula*, and *League of Legends Mobile*. Data indicate that applying this technology has increased production efficiency more than eightfold.

VISVISE Operational Workflow Diagram

Intelligent Skeleton Rigging - Generation in Progress

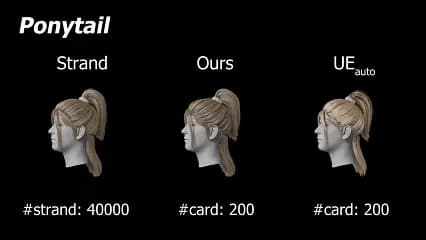

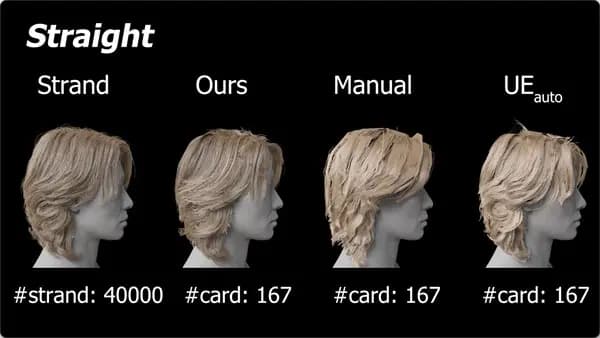

Beyond tools and AI rendering, Wu Kui and Zheng Zhongtian from Tencent's Lightspeed Studios presented a technical paper titled "Differentiable Rendering-based Automatic Hair Strip Extraction." Their talk covered asset decomposition, preprocessing, and differentiable rendering. The method extracts character hair strips automatically while maintaining high quality, saving significant repetitive labor in specific art production stages.

Wu Kui and Zheng Zhongtian presenting: "Differentiable Rendering-based Automatic Hair Strip Extraction"

In 3D modeling for games, traditional multi-view generation methods often struggle with geometric inconsistencies and with modeling complex topological structures. To address these challenges, a research team involving Tencent technical expert Weikai Chen introduced SPGen, a 3D shape generation method based on spherical projection, in the paper "Spherical Projection as Consistent and Flexible Representation for Single Image 3D Shape Generation."

SPGen projects the object's surface onto a bounding sphere, bypassing the view inconsistency issues inherent in traditional multi-view methods. This allows precise reconstruction of complex geometries while leveraging powerful diffusion priors to significantly improve model accuracy and generalization. Notably, it drastically reduces hardware requirements: training needs only two GPUs with a total of 96 GB of video memory. This enables developers to boost efficiency and handle complex 3D modeling more easily, pushing the boundaries of intelligent game creation.
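To make the idea of a spherical projection concrete, here is a minimal Python sketch. It is not SPGen's implementation: the function name `spherical_projection`, the equirectangular grid layout, and the choice to keep only the farthest surface distance per direction are all assumptions made for illustration. The point it shows is that every surface sample maps to a single direction on one sphere, so there are no separate camera views that could disagree with each other.

```python
import math


def spherical_projection(points, center, height=8, width=16):
    """Project 3D surface points onto a bounding sphere around `center`.

    Hypothetical helper for illustration only. Returns a height x width grid
    (equirectangular layout) in which each cell stores the largest distance
    from `center` among the points that fall into that direction bin, or
    None when no point lands there.
    """
    grid = [[None] * width for _ in range(height)]
    for x, y, z in points:
        dx, dy, dz = x - center[0], y - center[1], z - center[2]
        r = math.sqrt(dx * dx + dy * dy + dz * dz)
        if r == 0.0:
            continue  # the centre itself has no direction
        # Polar angle in [0, pi]; clamp guards against rounding outside [-1, 1].
        theta = math.acos(max(-1.0, min(1.0, dz / r)))
        # Azimuth wrapped into [0, 2*pi).
        phi = math.atan2(dy, dx) % (2.0 * math.pi)
        row = min(int(theta / math.pi * height), height - 1)
        col = min(int(phi / (2.0 * math.pi) * width), width - 1)
        if grid[row][col] is None or r > grid[row][col]:
            grid[row][col] = r
    return grid
```

The resulting grid is an ordinary 2D image, which is the kind of input image-based generative models work with. A real system would presumably store richer per-direction information than one distance value.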

Simultaneously, another study involving Tencent expert Du Xingyi, "Lifted Surfacing of Generalized Sweep Volumes," proposed a new framework for generating complex 3D sweep volumes. Developers can use this precise mathematical model to automatically generate non-self-intersecting, watertight 3D boundary surfaces. This improves modeling accuracy and provides reliable support for complex geometric and topological features, for example when designing intricate virtual environments and props.

AI + Game Engines: Optimizing Rendering for a New Visual Experience

Tencent Games showcased technical achievements covering core engine modules like lighting, animation, physical simulation, geometry processing, and real-time rendering. Through AI innovation, they aim to deliver more immersive and fluid interactive experiences.

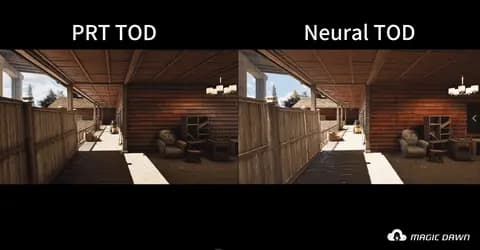

As computer graphics continues to revolutionize visual experiences, AI-driven in-game rendering has become a focal point. Li Chao, head of frontier rendering R&D at Tencent Games, shared the team's latest progress and broke down the core architecture of MagicDawn, their proprietary cross-engine lighting solution.

Li Chao sharing insights on "Frontier Exploration in Rendering Technology and Game Practice"

MagicDawn is a versatile solution capable of operating across different engines and supporting dynamic day-night cycles. By utilizing AI to drastically compress data volume and boost efficiency, it has successfully reduced the light baking time for large-scale games from days to mere hours. This allows mobile games to achieve realistic lighting effects comparable to AAA console titles.

Addressing the industry pain points of limited global illumination effects and long production times, MagicDawn offers an end-to-end answer from technology selection to large-scale implementation. The technology is currently deployed in games such as *Arena Breakout*, *Arena Breakout: Infinite*, *Wuthering Waves*, and *Roco Kingdom World*.

Smart Rendering Effects Showcase

In the animation system domain, technical experts Xilei Wei, Lang Xu, and Yeshuang Lin from Tencent's Morefun Studios, alongside a team from Zhejiang University, presented research on "Ultrafast And Controllable Online Motion Retargeting For Game Scenarios." They proposed a "semantic-aware geometric representation" method that addresses the challenge of applying one set of movements to characters of different body types quickly, naturally, and cost-effectively. With a single-frame processing time of just 0.13 milliseconds and precise control over hand-foot contact points and weapon interactions, it provides key technology for building vivid, large-scale game worlds.
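To put the 0.13 millisecond figure in context, the short Python calculation below estimates how many characters could be retargeted within a single frame. It rests on assumptions the article does not state: that 0.13 ms is the cost per character per frame, that characters are processed one after another, and that the game targets 60 frames per second. The function name `characters_per_frame` and the `budget_share` parameter are hypothetical.

```python
def characters_per_frame(cost_ms, fps, budget_share):
    """Estimate how many characters fit in the retargeting time budget.

    cost_ms:      assumed retargeting cost per character per frame, in ms
    fps:          target frame rate
    budget_share: fraction of each frame's time given to retargeting (0..1)
    """
    frame_budget_ms = 1000.0 / fps  # total time available per frame
    return int(frame_budget_ms * budget_share / cost_ms)
```

Under these assumptions, `characters_per_frame(0.13, 60, 1.0)` gives 128 characters if the whole 16.7 ms frame were spent on retargeting, and `characters_per_frame(0.13, 60, 0.1)` gives 12 characters if retargeting is limited to a tenth of the frame.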

Regarding geometric asset processing, experts including Wu Kui from Lightspeed Studios introduced "RL-ACD: Reinforcement Learning-Based Approximate Convex Decomposition," a collaboration with Zhejiang University. The technique offers a more efficient and compact geometric representation for complex scenes and character models, serving as a building block for massive, highly interactive virtual worlds. Additionally, "Kinetic Free-Surface Flows and Foams with Sharp Interfaces," which Wu Kui also contributed to, addressed the difficulty of simulating fluids efficiently and stably while preserving rich foam detail.

Facing the dual challenge of high image quality and high frame rates, a team involving engine expert Yuan Yazhen brought new solutions. Their "Predictive Sparse Shading-based Continuous Frame Extrapolation" renders only the complex areas of each frame, which significantly improves image stability and detail fidelity under extremely low rendering budgets while sustaining a high frame rate. Furthermore, to address rendering bottlenecks in immersive VR/AR scenarios, "StereoFG: Generating Stereo Frames from Centered Feature Stream" alternates rendering a low-resolution image for one eye while using a neural network to generate a high-quality image for the other at the same time, achieving a fourfold increase in stereo rendering output efficiency.
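The idea of shading only the complex areas of a frame can be illustrated with a toy Python sketch. This is not the paper's method, which predicts where shading is needed: here the per-tile complexity scores are simply given as input, and unselected tiles reuse the previous frame unchanged. The names `select_tiles_to_shade` and `compose_frame` are hypothetical.

```python
def select_tiles_to_shade(complexity, budget):
    """Return the set of (row, col) tiles to shade fully this frame.

    complexity: 2D list of per-tile scores (higher means more detail)
    budget:     maximum number of tiles that may be shaded
    """
    scored = [
        (score, r, c)
        for r, row in enumerate(complexity)
        for c, score in enumerate(row)
    ]
    # Highest-scoring tiles first; ties broken by position for determinism.
    scored.sort(key=lambda t: (-t[0], t[1], t[2]))
    return {(r, c) for _, r, c in scored[:max(budget, 0)]}


def compose_frame(previous, shaded, selected):
    """Reuse `previous` tile values except where `selected` tiles were re-shaded."""
    return [
        [shaded[r][c] if (r, c) in selected else previous[r][c]
         for c in range(len(previous[r]))]
        for r in range(len(previous))
    ]
```

With a budget of two tiles on a 2x2 grid, only the two highest-scoring tiles are refreshed and the other two are carried over, which is how a fixed shading budget can be concentrated where detail matters most.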

Closing Thoughts

Chen Baoquan, the current Director of the ACM SIGGRAPH Executive Committee and a professor at Peking University, noted that the core upgrade of this year's conference lies in the "critical role of 3D graphics in the development of AI and emerging industries." Games, which fuse 3D graphics, physical simulation, and real-time interaction, naturally serve as a core field for AI development and application.

Since 2016, Tencent Games has systematically invested in the training and application of AI in gaming. Beyond integrating AI into R&D pipelines to solve technical hurdles, the company has developed new features such as AI assistants and AI combat dogs in products like *Honor of Kings* and *Peacekeeper Elite*, changing the player experience. Because games offer high-fidelity, highly interactive scenarios, frontier AI technology is quickly being put into practice there and turned into experiences players can perceive, making millions of users the actual beneficiaries of AI evolution. The ultimate value of the cutting-edge technologies Tencent Games shared at this conference will be realized in how they become the next generation of tangible, interactive game experiences for players.